Computer storage systems have changed dramatically over the last 100 years or so, in capacity, price and access speed. Technology advances made these changes possible: progress was slow at first and took years to mature, but over the last decade it accelerated through rapid innovation and the collaborative efforts of the industry giants. These advances have transformed the way businesses and consumers use storage today, from ubiquitous smartphones and personal computers all the way to businesses that increasingly rely on cloud storage services.

In this article we trace the evolution of storage technologies over the years and examine the fundamental technical reason why that evolution has accelerated over the last decade.

Storage evolution over the last 70 years

(Relative to size, capacity and price)

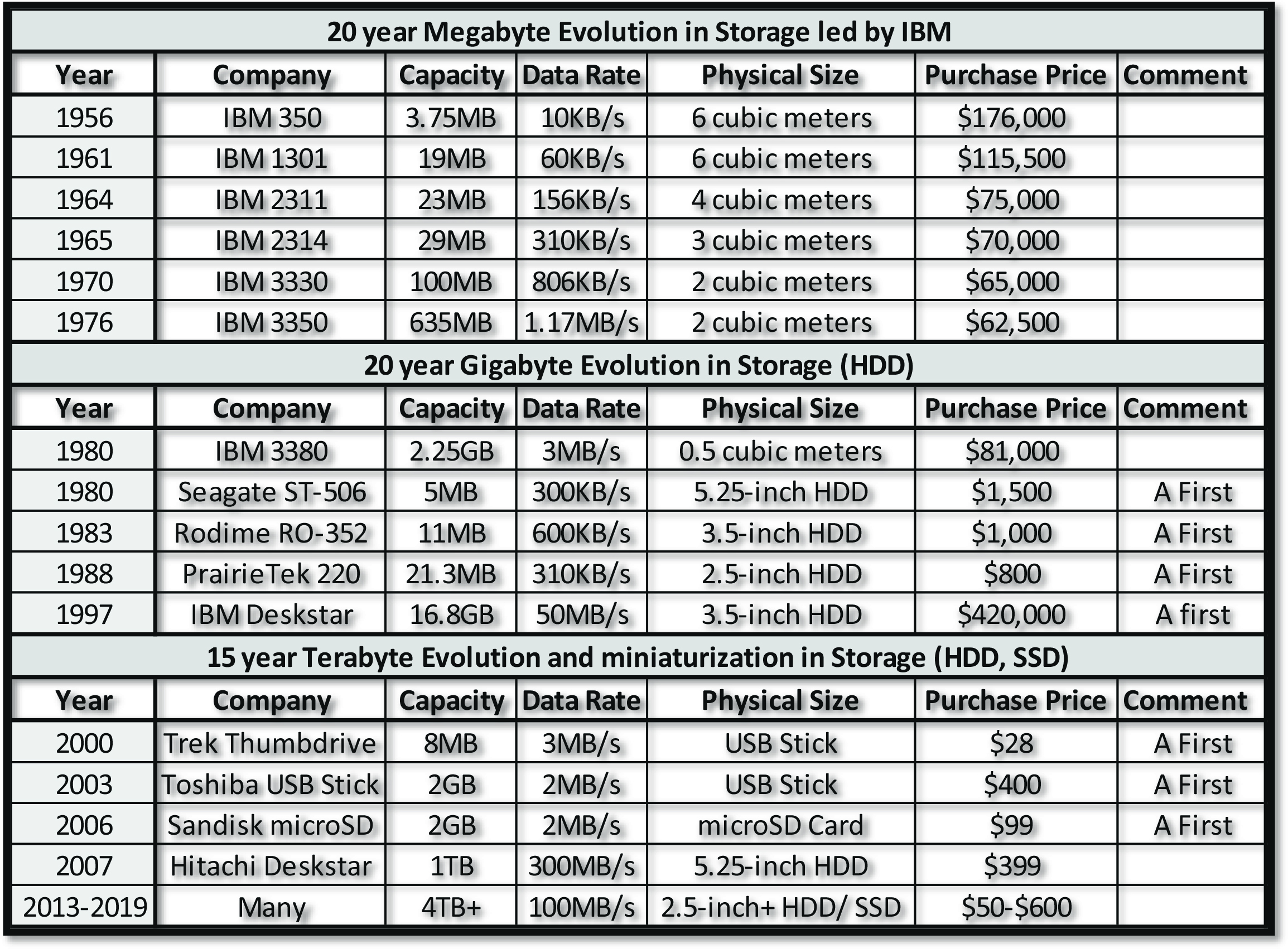

The accompanying table highlights the trends in storage. Advances were slow at first and were led mostly by IBM, which drove the megabyte revolution in the 1960s and 1970s. In the 1980s and 1990s other companies jumped on the storage bandwagon and started the gigabyte revolution, soon followed by the terabyte revolution. Today storage devices come in many physical form factors, from traditional mechanical hard disk drives (HDD) to non-volatile memory (NVM) in the form of solid-state drives (SSD); however, the fastest and most dynamic revolution is occurring in the cloud.

Storage technology evolution over the last 100 years

(Another view)

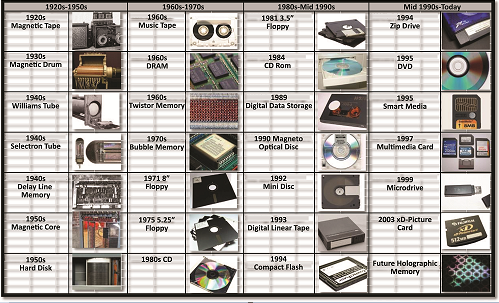

The evolution of storage technology can also be viewed another way. The accompanying diagram is a pictorial representation of how storage technologies have evolved over the last 100 years.

The fastest growing storage technology today

Today, most storage systems still use some form of mechanical storage device, known as a hard disk drive (HDD). HDDs dominate for several reasons: very high recording density per platter, more than one platter per drive, rotational speeds of up to 15,000 RPM for enterprise-class drives, and low costs due to economies of scale. However, they have inherent disadvantages: further increases in recording density are running into physical limits, raising the rotational speed of the platter raises the cost sharply, and as a mechanical device with moving parts an HDD is bound to fail physically sooner or later. A single-platter HDD at 15,000 RPM delivers at most about 100 MB/s for sequential block reads, and for random reads on the same configuration the transfer speed drops to as low as 10 MB/s.
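To make these figures concrete, the short sketch below works out what they mean for a bulk read. The two transfer rates are the ones quoted above; the 500 GB dataset size and the function names are assumptions chosen only for illustration. The rotational-latency helper uses the standard rule that, on average, the head waits half a revolution for the target sector, which is one reason random reads are so much slower than sequential ones.

```python
# Transfer rates quoted in the text for a single-platter 15,000 RPM HDD.
SEQUENTIAL_MB_PER_S = 100
RANDOM_MB_PER_S = 10


def transfer_seconds(size_gb: float, rate_mb_per_s: float) -> float:
    """Seconds needed to move size_gb of data at rate_mb_per_s (1 GB = 1000 MB)."""
    return size_gb * 1000 / rate_mb_per_s


def avg_rotational_latency_ms(rpm: int) -> float:
    """Average wait for the target sector: half of one full revolution."""
    ms_per_revolution = 60_000 / rpm
    return ms_per_revolution / 2


DATASET_GB = 500  # hypothetical dataset size, not a figure from the article

sequential_s = transfer_seconds(DATASET_GB, SEQUENTIAL_MB_PER_S)
random_s = transfer_seconds(DATASET_GB, RANDOM_MB_PER_S)

print(f"sequential read: {sequential_s / 3600:.1f} hours")
print(f"random read:     {random_s / 3600:.1f} hours")
print(f"average rotational latency at 15,000 RPM: {avg_rotational_latency_ms(15_000):.1f} ms")
```

Under these assumptions the same dataset takes ten times longer to read randomly than sequentially, and every random access pays a rotational delay of a couple of milliseconds before any data moves.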

Given the inherent limitations of mechanical storage devices and the rapid drop in prices of non-volatile memory (NVM), NVM is the next revolution in storage. It is found in almost all mobile devices and continues to replace mechanical devices across the board. There are several reasons for this: NVM is now cost competitive with HDDs per terabyte of capacity, and the price balance will continue to shift in favor of NVM in the coming years; NVM is far more reliable in the long term because it has no moving parts; NVM is over 100 times faster than HDD and, unlike HDDs, has similar transfer speeds for sequential and random reads and writes; and NVM offers microsecond read/write latency compared to millisecond latency for HDDs.
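The gap between millisecond and microsecond latency is easier to appreciate as operations per second. The sketch below assumes one outstanding request at a time (queue depth 1), so the I/O rate is simply the inverse of the latency. The 5 ms HDD latency is an assumed round number, not a figure from this article; the 200 µs and 20 µs values are the enterprise-flash and low-latency-flash figures quoted later in the article. The function name is illustrative.

```python
def iops_at_queue_depth_one(latency_us: float) -> float:
    """I/O operations per second when each request must finish before the next starts."""
    return 1_000_000 / latency_us


# Latencies in microseconds. The HDD value is an assumption for illustration;
# the two flash values are the ones quoted later in the article.
LATENCIES_US = {
    "HDD (assumed 5 ms)": 5_000,
    "enterprise flash (200 us)": 200,
    "low-latency flash (20 us)": 20,
}

for device, latency_us in LATENCIES_US.items():
    print(f"{device:28s} {iops_at_queue_depth_one(latency_us):>10,.0f} IOPS")
```

Real devices serve many requests in parallel, so absolute numbers will differ; the point of the sketch is only that each tenfold cut in latency buys a tenfold increase in serialized operations.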

Companies like Intel and Samsung have now developed three-dimensional (3D) NVM technologies, which increase storage density per unit of volume without any performance degradation.

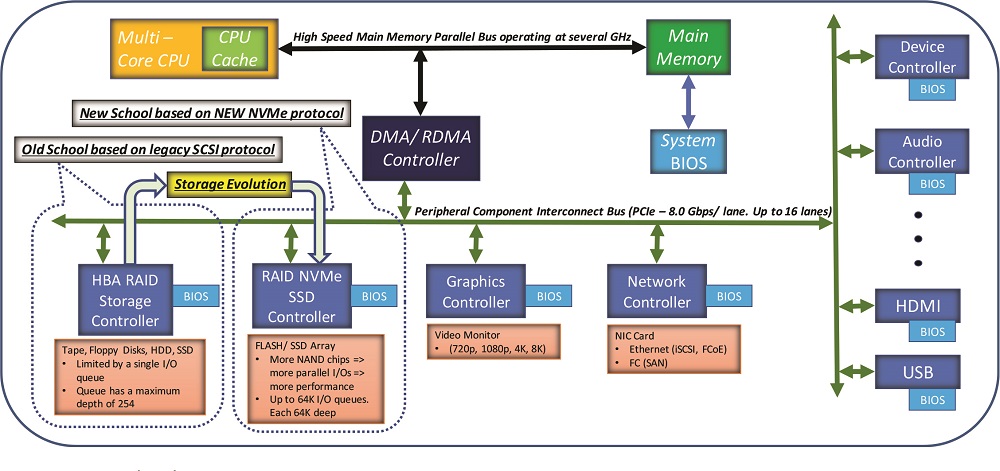

The first system implementations of NVM were SSDs that use the Serial ATA (SATA) protocol. The reason is simple: they achieve a 100-fold increase in transfer speeds relative to HDDs immediately, without changing the upper-level Small Computer System Interface (SCSI) protocol. The SCSI protocol is over four decades old and is used by I/O protocols such as Fibre Channel. All major operating systems also support it. So, as a quick gain for the industry, it was easy to replace the HDD with an NVM-based SSD.

The next disruption soon followed, because the SCSI protocol and its associated software stack are heavy in terms of execution time. SCSI protocol overhead adds directly to read and write latency, which is detrimental to high-performance applications such as high-frequency trading, small banking transactions, and the many database applications that support machine learning and artificial intelligence. An industry working group was therefore formed to address the latency problem associated with the SCSI protocol. It eliminated SCSI entirely and defined a new protocol, NVMe (Non-Volatile Memory Express), which takes advantage of the native speed of NVM. In this protocol the NVM device attaches directly to the PCI Express (PCIe) I/O bus, hence the ‘e’ in NVMe. This removes the need for a host bus adapter (HBA) and the hardware and firmware that reside in it. The following diagram illustrates this concept.

Latest NVM highlights

- PCIe Gen1 runs at 2.5 Gbps per lane per direction. Today’s SSDs pack Gen3 x2 or Gen3 x4 links (8 Gbps x 2 or 4 lanes = up to 32 Gbps) into a tiny M.2 ‘gumstick’ form factor.

- 3D NAND and 3D XPoint: DRAM-like bandwidth at flash economics, with very-low-latency flash reaching 20 µs per read/write I/O compared to 200 µs for enterprise flash.

- New form factors are arriving that pack terabytes of capacity, such as the ‘ruler’ form factor from Intel.

- NVMe lets performance scale as capacity increases; traditionally, denser HDDs did not bring any performance improvement.

- NVMe over TCP enables low-cost SAN deployment compared to InfiniBand, RoCE, iWARP or FC.

- NVMe allows dual-ported drives for high availability (the same PCIe connector carries either one Gen3/Gen4 x4 link or two separate Gen3/Gen4 x2 links), and the same connector can also accept a native SATA drive.
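The lane arithmetic in the first bullet above can be reproduced with a few lines of code. The per-lane rates for Gen1 and Gen3 are the ones quoted in that bullet; the Gen2 and Gen4 rates and the line-encoding overheads (8b/10b for Gen1 and Gen2, 128b/130b for Gen3 and Gen4) are details added here from the PCIe specifications rather than from this article, and the function names are illustrative. Treat it as a rough sketch: protocol overhead above the physical layer is ignored.

```python
# Per-lane line rate (gigatransfers per second) and encoding efficiency.
# Gen1/Gen3 rates appear in the article; the rest are added for illustration.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),      # 8b/10b encoding
    2: (5.0, 8 / 10),      # 8b/10b encoding
    3: (8.0, 128 / 130),   # 128b/130b encoding
    4: (16.0, 128 / 130),  # 128b/130b encoding
}


def raw_gbps(generation: int, lanes: int) -> float:
    """Raw line rate per direction, before encoding overhead."""
    rate, _efficiency = PCIE_GENERATIONS[generation]
    return rate * lanes


def usable_gbytes_per_s(generation: int, lanes: int) -> float:
    """Approximate payload bandwidth per direction in gigabytes per second."""
    rate, efficiency = PCIE_GENERATIONS[generation]
    return rate * efficiency * lanes / 8


for lanes in (2, 4):
    print(
        f"Gen3 x{lanes}: {raw_gbps(3, lanes):.0f} Gbps raw, "
        f"about {usable_gbytes_per_s(3, lanes):.2f} GB/s usable"
    )
```

The raw figure for Gen3 x4 matches the 32 Gbps in the bullet; after encoding overhead that is a little under 4 GB/s of usable bandwidth per direction.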

Further evolutions in NVMe – Non-Volatile Memory Express Over Fabric (NVMe-o-F)

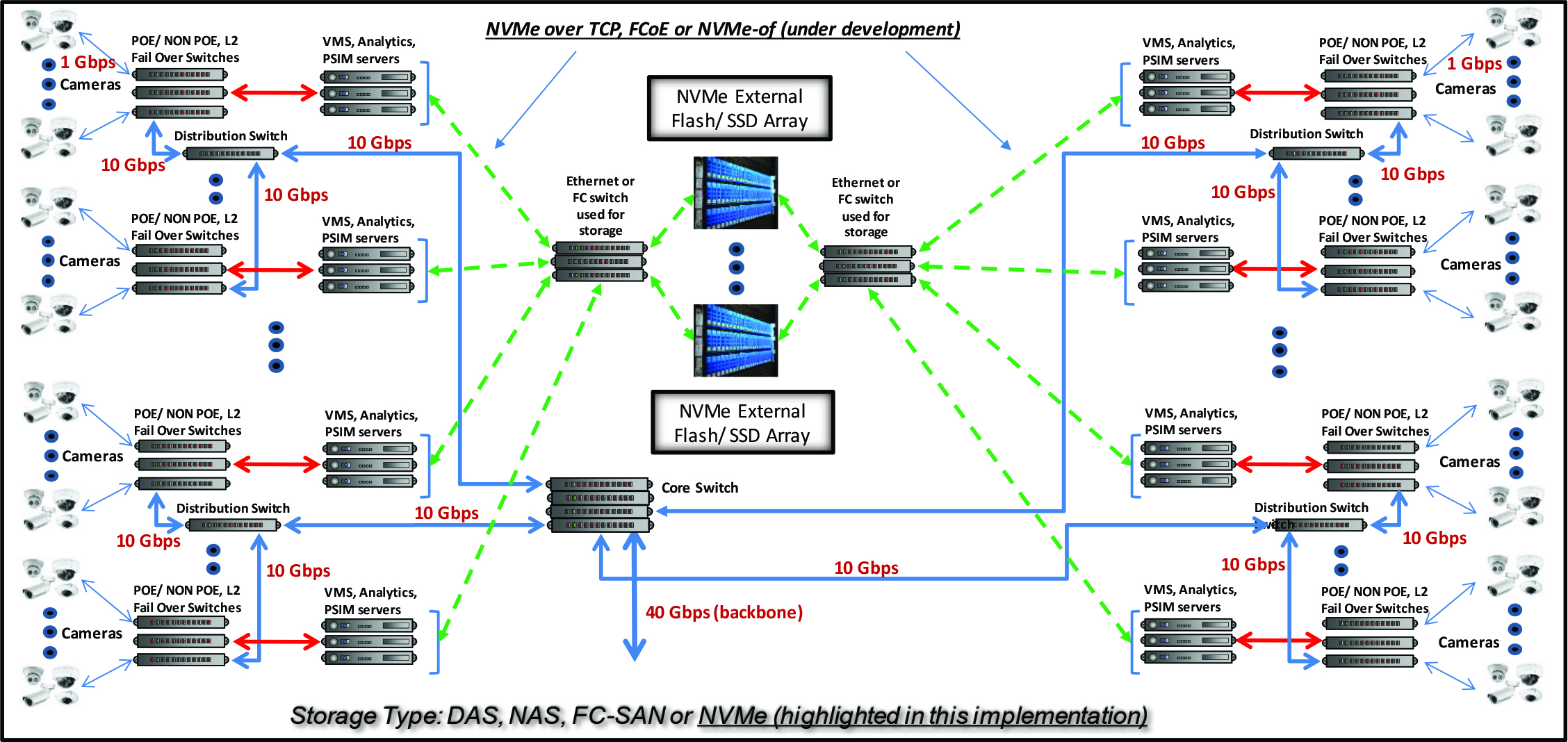

Keeping NVMe inside the box, that is, inside the server chassis, limits how far a storage system can scale. Network attached storage (NAS) and storage area networks (SAN) do not have these scaling issues. Both are field-proven technologies in large enterprises, each with advantages and disadvantages for certain types of applications. NVMe-o-F is now about to challenge the dominance of NAS and SAN storage networks. Several competing technologies, namely iWARP, FC and RoCE, are defining new protocols to replace traditional FC and NAS in enterprise and cloud environments. They intend to use existing Ethernet and Fibre Channel networks to deploy NVMe outside the server chassis. TCP/IP, a very old protocol that runs over existing Ethernet infrastructure, is also being extended to carry NVMe outside the server chassis. It is only a matter of time before all new (greenfield) storage projects adopt NVMe-o-F as the preferred storage technology. The accompanying diagram illustrates NVMe-o-F in a CCTV surveillance application.

Data security and data protection

Data security concerns unauthorized access to data, whereas data protection concerns the failure of computer components. Both needs are important, and they must live together as ‘components’ with interrelated recovery policies. As the subject is too vast to discuss in full, we highlight the main points here:

- Data growth: Sufficient policies must be in place to handle future growth.

- Cyberattack growth: Attacks are bound to increase, so this must also be considered in the overall security policy.

- Cost of data breaches: Breaches can become very expensive, which should act as a powerful incentive to have a strong security policy and mechanism in place.

- Increasing data value: As data becomes more valuable, there is all the more reason to prevent breaches with a solid security policy.

Storage vulnerabilities are inherent in storage systems. They include the following:

- Lack of encryption: Data must be encrypted both in transit and at rest.

- Cloud storage: Understand the security features offered by cloud storage providers; all of them come at a cost.

- Incomplete data destruction: Every available method must be used to ensure the total destruction of data when it is no longer needed.

- Lack of physical security: This is one of the most common causes of data breaches and is often overlooked. Policies and procedures must be put in place to address it, because critical data breaches are carried out by people.

Eight data security best practices

- Write and enforce data security policies that include data security models.

- Implement role-based access control and use multi-factor authentication where appropriate.

- Encrypt data in transit and at rest.

- Deploy a data loss prevention solution.

- Surround your storage devices with strong network security measures.

- Protect user devices with appropriate endpoint security.

- Provide storage redundancy with RAID and other technologies.

- Use a secure backup and recovery solution.
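As an illustration of the redundancy item in the list above, the sketch below shows the XOR parity idea used by single-parity RAID levels such as RAID 5: the parity block is the byte-wise XOR of the data blocks, so any one lost block can be rebuilt from the survivors. The block contents, the three-drive layout and the function name are hypothetical; a real array also handles striping, parity rotation and write ordering, none of which is modeled here.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One hypothetical stripe spread across three data drives.
data_blocks = [b"AAAA", b"BBBB", b"CCCC"]

# The parity block would be stored on a fourth drive.
parity = xor_blocks(data_blocks)

# Simulate losing the second drive and rebuild its block
# from the surviving data blocks plus the parity block.
survivors = [data_blocks[0], data_blocks[2], parity]
rebuilt = xor_blocks(survivors)

print("rebuilt block matches original:", rebuilt == data_blocks[1])
```

Single parity tolerates only one failed drive per stripe, which is why the list pairs redundancy with a separate backup and recovery solution.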