Weaponized for Malicious Purposes

GARIMA GOSWAMY

Chief Executive Officer and Co-Founder

Dridhg Security International Pvt. Ltd.

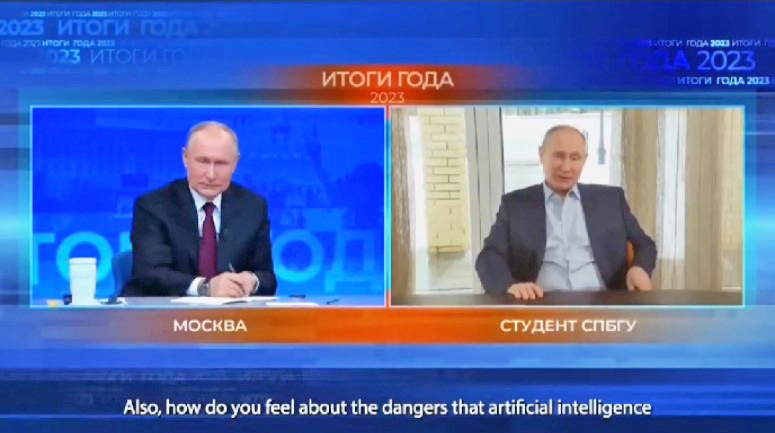

On January 7, 2019, Gabon faced an attempted coup sparked by a suspicious video of President Ali Bongo, which coincided with rumours of his failing health. Amid the Russia-Ukraine conflict that began in February 2022, a deepfake video depicting Volodymyr Zelenskyy surrendering circulated, sowing confusion. Subsequently, in June 2023, hackers aired a fake televised emergency message, supposedly from Russian President Vladimir Putin but in fact AI-generated, declaring martial law due to alleged Ukrainian incursions into Russia. There is also a viral interview in which President Putin converses with his own deepfake, created by a student from St Petersburg State University. A snapshot of the same is given below:

In India, a deepfake video of the Bharatiya Janata Party’s Manoj Tiwari speaking multiple languages went viral on WhatsApp before the Delhi elections. The technique resembled the one used in David Beckham’s multilingual ‘Malaria No More’ campaign.

Deepfake technology initially surfaced in 2018, when a Reddit user exploited it to create objectionable pornographic content featuring celebrities. With little public awareness of the technology at the time, the content was met with widespread skepticism. More recently, financial frauds leveraging synthetic audio and deepfake videos, often combined with impersonation on video calls, have become prevalent.

What began as a seemingly innocuous tool in the entertainment industry has spiraled into a technology facilitating misinformation, defamation, pornography, and financial fraud through social engineering tactics.

Good DeepFakes

Some uses of deepfake technology are not necessarily unethical. Text-to-speech can be used to produce audiobooks for the visually impaired, and text-to-speech or speech-to-speech can be used to create synthetic voices for the entertainment industry. Then there is the concept of hyper-personalization. Recently, Cadbury used hyper-personalization in an initiative that made the famous Indian actor Shah Rukh Khan a brand ambassador for small businesses. The videos were created by mixing a deepfake version of the actor with some shots recorded for the campaign. Now companies like ‘True Fans’ are using hyper-personalization to send customized messages on special occasions at a cost.

Understanding Deepfakes

Deepfakes are a form of manipulated media; the term combines ‘deep learning’ with ‘fake.’ Using artificial intelligence and deep learning techniques, deepfakes alter or superimpose content onto existing images, videos, or audio recordings to create convincing yet entirely fabricated scenes.

These manipulations often employ auto-encoder convolutional neural networks, which compress input images before reconstruction, or Generative Adversarial Networks (GANs), an unsupervised deep learning algorithm. GANs consist of a generator, which reproduces a specific person’s face, and a discriminator, which identifies flaws in the generated image, leading to iterative improvement until flaws are minimal.
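The tug-of-war between generator and discriminator can be made concrete with the loss each side tries to reduce. The following is a minimal sketch, assuming the standard binary cross-entropy formulation of GAN training; the function names are illustrative and are not taken from any particular deepfake tool. Each score is the discriminator’s estimate, between 0 and 1, that an image is real.

```python
import math

EPS = 1e-12  # guards against log(0) when a score is exactly 0 or 1


def _clamp(p):
    return min(max(p, EPS), 1.0 - EPS)


def discriminator_loss(real_scores, fake_scores):
    """Low when real images score near 1 and generated images score near 0."""
    real_term = -sum(math.log(_clamp(s)) for s in real_scores) / len(real_scores)
    fake_term = -sum(math.log(1.0 - _clamp(s)) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term


def generator_loss(fake_scores):
    """Low when the discriminator is fooled, i.e. generated images score near 1."""
    return -sum(math.log(_clamp(s)) for s in fake_scores) / len(fake_scores)


# Early in training the discriminator spots fakes easily (fake score 0.1),
# so the generator's loss is high; later it is fooled more often (0.9).
early = generator_loss([0.1])
late = generator_loss([0.9])
print(early > late)  # True
```

In training, the two networks take turns: the discriminator is updated to lower its loss, then the generator is updated to lower its own. When the discriminator can do no better than guessing (every score is 0.5), neither side can easily improve, which corresponds to the point where flaws are minimal.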

Where Lies the Solution?

Just as the proof of the pudding is in the eating, the detector is already present inside the deepfake creation process: the discriminator in a GAN is itself trained to spot flaws in generated images. In addition, certain cues can help distinguish the real from the deepfake, and there is credible software that falls under the category of ‘deepfake detectors.’

It is important to sensitize people to the problem of deepfakes and to present credible methods for distinguishing the real from the deepfake.

For image forensics and the authentication of audio and video, some reliable software is available. A highly reliable image forensics tool, particularly popular with law enforcement agencies in several countries, is Amped Authenticate, which uses multiple parameters to authenticate images. Its file analysis checks the metadata of an image and can also indicate whether the image comes from social media. Its global analysis examines the processing history behind an image and can demonstrate the presence of resaving, cropping, recapture, and editing, which helps to increase the accuracy of image analysis. Its local analysis identifies possible manipulations based on the nature of the JPEG file format and the traces left behind by editing software. Using color channels, error level analysis, JPEG ghost maps, and identification of cloned artifacts, it is possible to demonstrate which areas within an image have possibly been edited. These are just some of its features.

For the detection of synthetic voices, a notable tool that comes highly recommended is ResembleAI’s detector. It is user-friendly, and a WAV or MP3 file can easily be uploaded. It has two controls that have to be set: frame length and a sensitivity knob. Frame length controls the granularity of the model’s analysis. By default, the model analyzes the file in 2-second chunks, provides a real-or-fake prediction score for each chunk, and ultimately provides a weighted average score for the entire file. The smaller the chunks, the less likely it is that a chunk will contain both real and fake data, which ultimately improves the accuracy of the model. The sensitivity knob controls the level of scrutiny and aggression the model applies to its file analysis. Higher sensitivity is meant to capture any instance of synthetic audio in a file and flag the audio as fake, but the higher the sensitivity, the more likely it is that the model will return a false positive result.
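The interplay of frame length and sensitivity described above can be sketched in a few lines. This is an illustration under stated assumptions, not ResembleAI’s actual API or algorithm: `score_frame` is a hypothetical stand-in for a trained model, the file-level score is assumed to be weighted by chunk duration, and sensitivity is assumed to act by lowering the threshold at which a chunk is flagged.

```python
def split_into_frames(num_samples, sample_rate, frame_seconds=2.0):
    """Return (start, end) sample indices for consecutive chunks."""
    frame_len = max(1, int(round(frame_seconds * sample_rate)))
    return [(start, min(start + frame_len, num_samples))
            for start in range(0, num_samples, frame_len)]


def analyze(samples, sample_rate, score_frame, frame_seconds=2.0, sensitivity=0.5):
    """Score each chunk, then combine the chunk scores into a file-level result.

    score_frame: hypothetical callable returning P(fake) in [0, 1] for a chunk.
    sensitivity: assumed to lower the flagging threshold (threshold = 1 - sensitivity).
    """
    if not samples:
        raise ValueError("audio is empty")
    threshold = 1.0 - sensitivity
    per_frame, weighted_sum = [], 0.0
    for start, end in split_into_frames(len(samples), sample_rate, frame_seconds):
        p_fake = score_frame(samples[start:end])
        per_frame.append((start / sample_rate, end / sample_rate, p_fake))
        weighted_sum += p_fake * (end - start)  # weight by chunk length
    return {
        "frames": per_frame,
        "overall": weighted_sum / len(samples),
        # Flag the file if any single chunk crosses the threshold.
        "flagged": any(p >= threshold for _, _, p in per_frame),
    }


def toy_scorer(chunk):
    # Stand-in scorer for demonstration only: treats any sample equal to 1 as "fake".
    return 1.0 if 1 in chunk else 0.0


result = analyze([0, 0, 1, 1, 0], sample_rate=1, score_frame=toy_scorer)
print(result["overall"], result["flagged"])
```

With 2-second chunks at one sample per second, the toy file splits into three chunks, only the middle one of which is scored as fake. Raising `sensitivity` lowers the threshold, so more borderline chunks get flagged, which is the false-positive trade-off noted above.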

Several deepfake detectors are available for checking the authenticity of videos, including DeepWare, DeepIdentify, Sensity, FakeBuster, FakeCatcher by Intel, and Kroop AI’s detector. Of these, Sensity is recommended for its high level of accuracy. A distinctive feature of FakeBuster is that it can capture live videos and can be integrated with video conferencing platforms like Zoom and Skype. This made-in-India software is available as open source and was developed in 2021 with the risk of imposters in video conferencing in mind, particularly as the frequency of video calls increased after COVID-19. Had such software been used, the 2024 Hong Kong fraud, in which a finance executive transferred $25 million to imposters after a fake Zoom call, might have been prevented.

We cannot depend on deepfake detectors alone, because this space is constantly evolving and there is a cat-and-mouse chase between deepfake creation software and deepfake detection software. Moreover, no software can guarantee 100% accuracy. It is therefore important to rely on human intelligence too.

Some warning signs and techniques that individuals can use to identify potential deepfake video content include checking:

- Inconsistencies in facial expressions and emotions.

- Blurring or distortion around edges.

- Inconsistent lighting and shadows.

- Unusual gaze and eye contact.

- Unusual blinking patterns.

- Mismatched lip sync.

- Hair and clothing anomalies.

- Background anomalies.

- Contextual inconsistencies.

- The source and context.

To identify synthetic audio, one must listen for slurring, unnaturalness, background sound, overlapping voices, and a lack of fullness. It is noteworthy that synthetic voices typically struggle to reach high notes.

Legal Framework

Although no specific laws target deepfakes, existing legislation on defamation, privacy rights, copyright infringement, and cybercrimes offers fragmented protection. Suspected deepfake fraud, or any other fraudulent activity, should be promptly reported to the authorities via cybercrime.gov.in or the toll-free number 1930. Likewise, objectionable deepfake content created using one’s identity should be reported for removal from search engines via stopncii.org. Now that seeing is no longer believing, we need to be sceptical and vigilant, and to use a combination of human intelligence and technology when in doubt.

*Views expressed in the article are solely those of the author.